Evaluación formativa con tecnologías entre docentes para el desarrollo de proyectos de innovación educativa

Formative evaluation with technologies among teachers for the development of educational innovation projects

Violeta Cebrián-Robles

Universidad de Málaga

https://orcid.org/0000-0002-6862-8270

Francisco José Ruíz-Rey

Universidad de Málaga

https://orcid.org/0000-0002-5064-6534

Manuel Cebrián-de-la-Serna

Universidad de Málaga

https://orcid.org/0000-0002-0246-7398

Fernando Manuel Lourenço-Martins

Centro de Investigação e Inovação em Educação (inED), Escuela Superior de Educación de Coimbra (ESEC), Politécnico de Coimbra

https://orcid.org/0000-0002-1812-2300

RESUMEN

Es pertinente abordar los proyectos de innovación educativa de forma colegiada entre los docentes, analizando y evaluando con criterios e indicadores de calidad sus posibles obstáculos e impactos. Para ello, nada mejor que las rúbricas digitales por su visión más objetiva en la aplicación de criterios y por sus posibilidades digitales que facilitan el intercambio y discusión de estos criterios en redes profesionales. Existe una literatura abundante sobre el uso de rúbricas digitales en la formación inicial, no así en la formación permanente. El estudio que se presenta aquí es una investigación correlacional donde analiza el uso de rúbricas digitales en la evaluación entre pares de docentes en activo, para desarrollar competencias para la evaluación de proyectos de innovación de sus contextos de prácticas. La muestra por conveniencia de 70 docentes en un programa de formación permanente donde se analizan tres variables: las evaluaciones entre pares, las calificaciones finales y la evaluación del docente a los proyectos de innovación educativa presentados. Los resultados indican que el uso de las rúbricas constituye una metodología que facilita compartir criterios y su aplicación en comunidades profesionales, además se comprueba que existen diferencias estadísticas significativas entre los grupos A y B en las medias de las tres variables del estudio. El estudio también presenta otras experiencias y ejemplos de rúbricas aplicadas a diferentes contextos de formación de docentes y recursos de rúbricas en internet.

PALABRAS CLAVE

Trabajo colaborativo; formación permanente; evaluación; rúbricas digitales; innovación educativa.

ABSTRACT

It is appropriate to approach educational innovation projects in a collegiate manner among teachers, analysing and evaluating their possible obstacles and impacts with quality criteria and indicators. For this purpose, there is nothing better than digital rubrics because of their more objective vision in the application of criteria and because of their digital possibilities that facilitate the exchange and discussion of these criteria in professional networks. There is abundant literature on the use of digital rubrics in initial training, but not in lifelong learning. The study presented here is a correlational research study that analyses the use of digital rubrics in the peer evaluation of in-service teachers to develop competences for the evaluation of innovation projects in their contexts of practice. The convenience sample of 70 teachers in an in-service training programme where three variables are analysed: peer evaluations, final grades and the teacher’s evaluation of the educational innovation projects presented. The results indicate that the use of rubrics is a methodology that facilitates the sharing of criteria and their application in professional communities, and that there are significant statistical differences between groups A and B in the means of the three variables of the study. The study also presents other experiences and examples of rubrics applied in different teacher education contexts and rubric resources on the Internet.

KEYWORDS

Collaborative work; lifelong learning; assessment; digital rubrics; educational innovation.

1. INTRODUCTION

Lifelong learning is a necessity in all professions, especially for acquiring the new skills demanded by an increasingly digitized world. All work activities, as well as citizens' daily lives, are being affected by emerging technologies, and teachers, recurrently and even more so after Covid19, require training to integrate technologies efficiently in their classrooms and centers (Qayyum, 2023). During the pandemic, classes went ahead, sometimes with significant deficiencies in technological infrastructure, and education suffered significant setbacks in basic subjects. The report by Jordan et al. (2021) describes a drop in basic skills (mainly reading and arithmetic), and according to the study by Alban-Conto et al. (2021) the distance learning provided was not sufficient to avoid this delay.

Faced with this adversity, many teachers sought ingenious solutions during Covid19, undoubtedly effective for such a disruptive situation. Today, in a post-pandemic stage, it is time to review and analyze those solutions and to address the gaps in the provision of resources to the centers, as well as the competence gaps detected in teachers. Equally, and less talked about but no less important, is the proper use of technologies by students, both in the prevention of dangers (cyberbullying, identity security, academic plagiarism, addiction to cell phones, etc.) (Cebrián-Robles, 2016; Cebrián-Robles et al., 2020; Gallego-Arrufat et al., 2019) and in the advantages (improvements in production, storage, communication, transfers, collaborative work, etc.) that technologies and the internet provide, which teachers verified during Covid19 (Reuge et al., 2021). Therefore, after the pandemic, there is a greater need for ongoing training on the use of technologies for a successful change in classroom practices, and for training that changes teachers' attitudes so that they can face adversity and generate educational innovation projects in general, but especially on the use of technologies as a means and an end, which allows schools and their classrooms to be transformed and facilitates new ways of building knowledge in communities of practice and professional networks. In the words of Hargreaves & Fullan (2020), it would not be appropriate to return to normality, but to use the experience as an opportunity to “transform” education with the use of technologies.

Collaborative and collegial work among teachers is inherent to teaching and professional development. When we face common and shared problems, we address change and educational innovation better together, since teacher professionalization is strengthened through collaboration (Hargreaves and O’Connor, 2020). It is true that teaching is an individual exercise in the classroom; however, it requires prior collaboration and coordination, and subsequent analysis with the educational community of the center. The complex challenges we currently face, such as the implementation of technologies in the classroom, are best solved through collaboration and the use of professional networks for the shared evaluation of experiences and best practices (Lazarová et al., 2020; Cebrián-Robles et al., 2020). Intracenter collaboration is easier to generate than intercenter collaboration (Cebrián-Robles et al., 2022), although technological networks now give the latter, in addition to efficiency in collaborative work, new ways of sharing teaching experiences (Ruiz-Rey et al., 2021).

Digital rubrics are among the tools and methodologies that have best facilitated formative, competency-based assessment in hybrid models (Pérez-Torregrosa et al., 2022b). The literature includes review studies on their impact on learning (Cebrián de la Serna & Bergman, 2014; Raposo-Rivas & Cebrián-de-la-Serna, 2019), and the rise of emerging technologies such as AI opens new challenges for learning assessment processes and new lines of research in educational technology. Different solutions and tools can be found on the Internet. Most of them allow working in distance or hybrid modalities, as well as sharing criteria and generating rubrics jointly among teachers, thus facilitating the exchange of rubrics and good educational practices. Likewise, infographics, videos and open resources on the use of these tools and their possible methodologies can be found on the Internet (Cebrián Robles, 2021).

In education we need objective rubrics with criteria and quality standards that make it easier to evaluate student learning competencies and educational programs. This is especially true of aspects that remain complex and not entirely resolved, such as the evaluation of learning by competencies, now with the use of technologies, which requires training on digital competencies and new forms of evaluation (Martín et al., 2022). Such training covers topics such as improvement objectives for teachers, methodologies to achieve common goals and the exchange of good practices.

The research presented here focuses on a continuing education program for practicing teachers at all levels of education in Ecuador during the 2018-2019 academic year. In the module on evaluation of learning with technologies, part of a master’s degree on technologies applied to education, teachers are given exercises that are consistent with what they are intended to learn. In this case, we proposed that teams build an educational innovation project to transform their classrooms as the final product of the course, followed by peer evaluation of these projects. This design allows participants to learn the indicators and elements of an innovation project, to discuss and reach consensus on these elements in collaborative teams and, at the end, to apply quality criteria for their evaluation in an equally collaborative way (peer evaluation) through digital rubrics. The underlying idea is that experiencing technological tools in their own learning is one more key to teachers reproducing them successfully in their classes in the future.

There are previous works on the use of technologies for formative assessment, especially with digital rubrics (Martínez-Romera et al., 2016; Cebrián-de-la-Serna, 2014; 2018; Cebrián-Robles et al., 2018; Pérez-Torregrosa et al., 2022a). There are likewise studies on peer assessment as a methodology for learning shared teaching skills through collaborative work, such as the study by Masek et al. (2021), who analyzed the learning of a group of students in initial teacher training in which a rubric was used for self-evaluation and peer evaluation practices, and found that it promoted the development of the professional competencies of future teachers.

Studies such as these are valuable, but they are mostly set in the learning contexts of students in initial training; studies in lifelong learning are scarce. For this reason, the present research aims to find out whether peer assessment among in-service teachers using the CoRubric.com rubric platform, which is the product of an R+D+i project [1], allows us to analyse how close their assessments are to the expert teacher’s, depending on the final assessment variable. The research question, therefore, is whether there is a relationship between each participant’s overall grade in the subject and the peer assessment score compared with the teacher’s.

2. METHOD

The research design is correlational and compares, across samples, the three variables used in the study: a) the results of the peer evaluation of the educational innovation project presented by each team, b) the teacher’s evaluation of that project and c) the final individual evaluation of each participant.

The non-probabilistic convenience sample (Cohen et al., 2011) comprised 70 teachers, organized in collaborative work teams of about four to five participants. The two groups (A and B) were formed according to the participants’ grades at the time of enrollment in a continuing education master’s degree during 2018, where a newly validated rubric was applied as a proof of concept.

The rubric was validated during the 2017-2018 academic year by expert teachers in a framework project on electronic assessment of competencies among eight universities in five Ibero-American countries. The validation process is described in a previous study, which calculated the reliability of the rubric using Cronbach’s alpha (α = 0.934) (Fernández-Medina et al., 2021).

Data were collected with a digital rubric on the CoRubric.com platform, which supports peer assessment, and the results were exported to Excel for subsequent analysis using SPSS version 24. The platform is oriented towards formative and collaborative assessment, hence its name. The reader can consult and use this validated rubric in the platform’s public rubric database (https://acortar.link/U1NgYN). Among the most relevant functions of this platform is the possibility of developing all the techniques of formative assessment (peer assessment, team assessment, self-assessment, ipsative assessment and 360º assessment) with qualitative data (annotations collected on why the criteria were applied, conversations on the assessment process, etc.) and quantitative data (weighted averages, group scores, team assessments, group situation in terms of the best and worst values of indicators, competencies and evidences, export of grades and conversations, etc.).

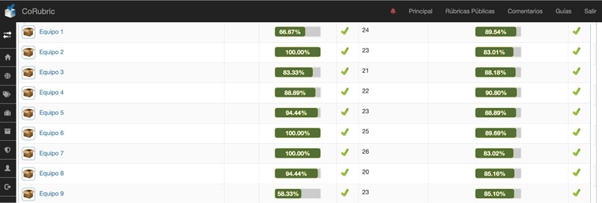

CoRubric.com is free and open access. A teacher can share part of the evaluation or be invited to evaluate only one student (as with center tutors in internships), and access can be integrated through external links from the tasks in any known LMS platform. Figure 1 shows the teacher’s grades on the left and the students’ averages on the right for this study. The data export to Excel shows the individual values that each user has given to a team, as in this case, or to other peers.

Figure 1. Teacher evaluation -left column- and peer evaluation -right column- in the Corubric.com platform.

3. RESULTS AND DATA ANALYSIS

We analysed the variables “teacher’s mark for the presentation”, “peer mark” and “final mark” for the 70 participants. A Cronbach’s Alpha value of 0.679 was obtained, which can be considered acceptable. Cronbach’s Alpha provides a measure of the internal consistency of the instrument, that is, the extent to which all the questions in the questionnaire measure the same concept (Tavakol and Dennick, 2011).

The Cronbach’s alpha values are classified as follows:

Unacceptable (α < 0.60); weak (0.60 ≤ α < 0.70); reasonable (0.70 ≤ α < 0.80); good (0.80 ≤ α < 0.90); and excellent (α ≥ 0.90) (Hill and Hill, 2002, p. 149).
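As an illustration of how this coefficient and its classification can be obtained, the following is a minimal sketch in Python. It assumes the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores) with sample variances; the function names and the toy scores are hypothetical and are not the study's data or its SPSS procedure.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items.

    `items` holds one list of scores per item (variable); all lists
    are aligned so that position i belongs to the same respondent.
    """
    k = len(items)
    # Total score of each respondent across all items.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def classify_alpha(alpha):
    """Bands as listed above (Hill and Hill, 2002)."""
    if alpha < 0.60:
        return "unacceptable"
    if alpha < 0.70:
        return "weak"
    if alpha < 0.80:
        return "reasonable"
    if alpha < 0.90:
        return "good"
    return "excellent"


# Hypothetical toy scores: two items answered by four respondents.
toy_items = [[1, 2, 3, 4], [2, 1, 4, 3]]
alpha = cronbach_alpha(toy_items)
print(f"alpha = {alpha:.3f} ({classify_alpha(alpha)})")
```

With the same bands, `classify_alpha(0.679)` returns "weak" and `classify_alpha(0.934)` returns "excellent".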

The groups (A and B) were compared using Student’s t-test for independent samples, after checking the normality assumption (Pallant, 2011). The assumption of normality for each of the univariate dependent variables was examined using the Kolmogorov-Smirnov test. When normality was not verified for a dependent variable, it was assumed on the basis of the Central Limit Theorem, given n ≥ 30 (Marôco, 2021). The effect size was determined using Cohen’s d and classified as follows: small effect (d < 0.20); moderate effect (0.20 ≤ d < 0.80); and large effect (d ≥ 0.80) (O’Donoghue, 2013). All statistical analyses were performed using IBM SPSS Statistics software (version 25, IBM USA), at a significance level of 5 % (p < 0.05).
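The effect size calculation can be sketched from summary statistics alone. The example below is a minimal sketch that assumes the pooled-standard-deviation form of Cohen’s d (the text does not state which variant SPSS was configured to report). It uses the group means and standard deviations reported in Table 1 and the group sizes reported in Table 2; the function names are hypothetical.

```python
from math import sqrt


def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Cohen's d using a pooled standard deviation (assumed variant)."""
    pooled_variance = (
        (n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2
    ) / (n_a + n_b - 2)
    return (mean_b - mean_a) / sqrt(pooled_variance)


def classify_effect(d):
    """Bands as listed above (O'Donoghue, 2013)."""
    size = abs(d)
    if size < 0.20:
        return "small effect"
    if size < 0.80:
        return "moderate effect"
    return "large effect"


# (mean A, SD A, n A, mean B, SD B, n B), rounded values from the tables.
summary = {
    "Final Assessment": (9.35, 0.72, 37, 9.67, 0.34, 33),
    "Professor Assessment": (8.74, 1.44, 37, 9.35, 0.70, 33),
    "Average peer rating": (8.71, 0.27, 37, 9.05, 0.11, 33),
}

for name, stats in summary.items():
    d = cohens_d(*stats)
    print(f"{name}: d = {d:.3f} ({classify_effect(d)})")
```

Under these assumptions the sketch gives d values of about 0.56, 0.53 and 1.62, in line with the d column of Table 1.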

Table 1. Descriptive statistics and comparison between groups (A and B).

| Variable | Group | Mean | SD (Standard deviation) | t | p | d | Effect size (ES) |
|---|---|---|---|---|---|---|---|
| Final Assessment | A | 9.35 | 0.72 | –2.29 | 0.026 | 0.558 | moderate effect |
| | B | 9.67 | 0.34 | | | | |
| Professor Assessment | A | 8.74 | 1.44 | –7.09 | 0.001 | 0.529 | moderate effect |
| | B | 9.35 | 0.70 | | | | |
| Average peer rating | A | 8.71 | 0.27 | –2.45 | 0.001 | 1.616 | large effect |
| | B | 9.05 | 0.11 | | | | |

The Student’s t-test for independent samples compares, in our case, the means of the teacher’s and peers’ ratings of the presentations, and yields a two-tailed significance indicating that the difference in means is significant. It can also be seen that the peers award lower marks than the teacher (see Table 2).

Table 2. Independent samples T-test on the three variables mentioned above according to class groups.

| Variable | Class group | N | Mean |
|---|---|---|---|
| Final Assessment | A | 37 | 9.3477 |
| | B | 33 | 9.6742 |
| Professor Assessment | A | 37 | 8.7405 |
| | B | 33 | 9.3485 |
| Average peer rating | A | 37 | 8.71 |
| | B | 33 | 9.05 |

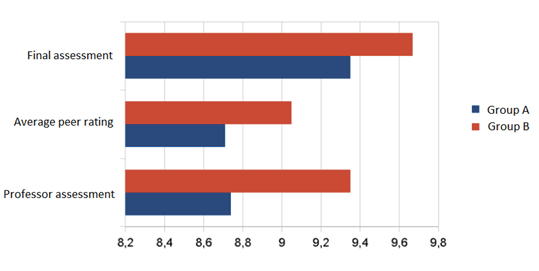

Group B outperforms group A in all three variables (teacher’s marks, peer marks and final marks). In general terms, peers score slightly lower than the teacher. Group B rates its peers higher (9.05, against a teacher mean of 9.35), approaching the teacher’s mark. In group A the peer mean is lower but lies even closer to the teacher’s (8.71 against 8.74). The independent samples test supports the statement that the teacher gives better marks than the peers, as two-tailed significance values below 0.05 are obtained, which indicates that the difference in means in the 3 variables is significant. Figure 2 illustrates these differences.

Figure 2. Differences in means between the variables teacher evaluation, peer assessment and final assessment.

In this research we found that, despite group differences in initial programme entry scores, the rubric has a positive impact on all groups. The same happened in other studies with teachers in initial training and quasi-experimental designs, where the entry or cut-off level did not prevent the rubric methodology from having an impact on all groups. In the work of Cebrián-de-la-Serna et al. (2014), three groups (two experimental and one control) had been chosen according to their access grades, with the control group having the highest grade upon entering the programme. The impact of the rubric was identified in all three groups: the grades of the control group were more dispersed, while in the experimental groups, which had a lower initial grade, the final grades were more homogeneous.

The difference between our study and the one mentioned above is that both groups, differentiated by grades, were offered the same methodology with the rubric. The impact of the rubric was therefore manifested in a sample closer to (group with better starting grade) or further from (group with lower starting grade) the programme teacher’s grades, and group B again outperforms group A in the scores of the three variables (teacher grades, peer grades and final grades).

Comparing the peer evaluation with the teacher’s, we also found that students initially tend to score below the teacher’s grades. This happened in this study, where peers score slightly below the teacher, with a slight difference in favour of group B: group B gives its peers a better grade (9.05, against a teacher mean of 9.35), getting closer to the teacher’s grade. Similar evidence is found in other studies such as Serrano-Angulo et al. (2011), although in initial training and with repeated evaluations (not a single evaluation at the end, as in the present study), where the students’ evaluations come increasingly close to the teacher’s grades as the number of repeated tests with the same rubric increases. This leads us to think that future work with groups of teachers should go beyond a single final assessment and include intermediate assessments, so that teachers internalise the rubric criteria by applying them to presentations of experiences and can later draw on that learning to analyse the experience.

We need more studies in the context of in-service teacher training, with different methodologies and research designs, that allow us to build an evidence base for identifying a more effective model of in-service training. In such a model, digital rubrics would show all the possibilities found in initial training (Pérez-Torregrosa et al., 2022a) and become an object of analysis and thematic training in communities of practice, where for various reasons on-the-job training is attractive (Chen, 2018) and digital rubric platforms can be used for inter-centre collaboration. Undoubtedly, the digital characteristics of rubrics enable this shared networking and facilitate debate on the criteria and their application to different learning situations.

Funding

[1] This study was carried out thanks to the R&D project “Transición digital y ecológica en la enseñanza de las ciencias mediante tecnologías disruptivas para la digitalización de juegos educativos y su evaluación con e-rúbricas”, funded by MCIN/AEI/10.13039/501100011033 and by the European Union “NextGenerationEU”/PRTR (TED2021-130102B-I00).

REFERENCES

, , , , , & (2021). Potential effects of COVID-19 school closures on foundational skills and Country responses for mitigating learning loss. International Journal of Educational Development, 87, 102434. https://doi.org/10.1016/j.ijedudev.2021.102434

, (2021). Herramientas para evaluar en línea: rúbricas digitales. en Sánchez-González, M. (coord.). #DIenlínea UNIA: guía para una docencia innovadora en red. Universidad Internacional de Andalucía, 118-130. https://doi.org/10.56451/10334/6110

, y (2022). La colaboración entre los centros educativos y los principales problemas frente a la pandemia. Congreso Internacional Edutec2022. Educación transformadora en un mundo digital: Conectando paisajes de aprendizaje. Organizado por el Institut de Recerca i Innovació Educativa (IRIE).

, , & (2020). Conocimiento de los estudiantes universitarios sobre herramientas antiplagio y medidas preventivas. Pixel-Bit, Revista de Medios y Educación, 57, 129–149. https://doi.org/10.12795/pixelbit.2020.i57.05

, , , & (2018). Impacto de una rúbrica electrónica de argumentación científica en la metodología blended-learning. Revista Iberoamericana de Educación a Distancia, 21(1), 75-94. https://doi.org/10.5944/ried.21.1.18827

(2018). Modelo de evaluación colaborativa de los aprendizajes en el prácticum mediante Corubric. Revista Practicum, 3(1), 62-79. https://doi.org/10.24310/RevPracticumrep.v3i1.8275

, , & (2014). eRubrics in cooperative assessment of learning at university. Comunicar, 43, 153-161. https://doi.org/10.3916/C43-2014-15

, & y (2016). ¿Ética o prácticas deshonestas? El plagio en las titulaciones de Educación. Revista de Educación, 374, 161–182. https://doi.org/10.4438/1988-592X-RE-2016-374-330

; & (2014). Las eRúbricas en la evaluación cooperativa del aprendizaje en la Universidad. Comunicar, 43. https://doi.org/10.3916/C43-2014-15

& (2014). Evaluación formativa con e-rúbrica: aproximación al estado del arte. Revista de docencia universitaria, 12(1), 15-22. http://dx.doi.org/10.4995/redu.2014.6427

(2018). Enhancing teaching competence through bidirectional mentoring and structured on-the-job training model. Mentoring & Tutoring: Partnership in Learning, 26(3), 267–288. https://doi.org/10.1080/13611267.2018.1511948

, , & (2011). Research methods in education. (7th Edition). Routledge.

, , , , and (2021). Assessment oral competence with digital rubrics for the Ibero-American Knowledge Space. Pixel-Bit. Revista de Medios y Educación, 62, 71-106. https://doi.org/10.12795/pixelbit.83050.

, , & (2019). Competencia de futuros docentes en el área de seguridad digital. Comunicar, 61, 57-67. http://doi.org/10.3916/c61-2019-05

, & (2020). Profesionalismo colaborativo. Cuando enseñar juntos supone el aprendizaje de todos. Morata.

, & (2020). Professional capital after the pandemic: revisiting and revising classic understandings of teachers’ work. Journal of Professional Capital and Community, 5(3/4), 327–336. https://doi.org/10.1108/JPCC-06-2020-0039

& (2002). Investigação por questionário. (2nd Edition). Sílabo.

, , , & (2021). Education during the COVID-19 crisis: Opportunities and constraints of using EdTech in low-income countries. Revista de Educación a Distancia -RED-, 21(65), 1-15. https://doi.org/10.6018/red.453621

(2021). Análise Estatística com o SPSS Statistics. (8th edition). ReportNumber, Lda.

, & (2016). Assessment of teaching skills with e-Rubrics in Master of Teacher Training. Journal for Educators, Teachers and Trainers, 7(2). https://jett.labosfor.com/index.php/jett/article/view/226/127

, , & (2022). Análisis de las competencias digitales docentes a partir de marcos e instrumentos de evaluación. IJERI: Revista Internacional de Investigación e Innovación Educativa, (18), 62–79. https://doi.org/10.46661/ijeri.7444

, , , & (2021). Enhancing Professional Knowledge and Self-Concept Through Self and Peer Assessment Using Rubric: A Case Study for Pre-Services TVET. Teachers. Journal of Engineering Education Transformations, 35(1), 110–115. https://doi.org/10.16920/jeet/2021/v35i1/22062

(2013). Statistics for sport and exercise studies: an introduction. Routledge.

(2011). SPSS Survival Manual. Open University Press.

, , & (2022a). Digital rubric-based assessment of oral presentation competence with technological resources for preservice teachers. Estudios sobre Educación, 43, 177-198 https://doi.org/10.15581/004.43.009

, y (2022b). ¿Qué hemos aprendido sobre la evaluación de rúbricas digitales en los aprendizajes universitarios? En Merma-Molina y Gavilán-Martín (Eds.), Investigación e innovación en el contexto educativo desde una perspectiva colectiva, 229-240. Dykinson.

, (2023). Assessing the Digital Transformation of Education Systems. An International Comparison. In Zawacki-Richter, O., & Jung, I. Handbook of Open, Distance and Digital Education. Springer Nature Singapore. p.249-266 https://doi.org/10.1007/978-981-19-2080-6

, & (2019). Technology to Improve the Assessment of Learning. Digital Education Review, 35, 1–13. http://revistes.ub.edu/index.php/der/article/view/28865/pdf

, , , , , , , & (2021). Education response to COVID 19 pandemic, a special issue proposed by UNICEF: Editorial review. International Journal of Educational Development, 87, 102485. https://doi.org/10.1016/j.ijedudev.2021.102485

and (2011). Study of the impact on student learning using the eRubric tool and peer assessment. In Education in a technological world: communicating current and emerging research and technological efforts, 421-427. Edit Formatex Research Center. https://cutt.ly/8t57dPt

and (2011) Making Sense of Cronbach’s Alpha. International Journal of Medical Education, 2, 53-55. http://dx.doi.org/10.5116/ijme.4dfb.8dfd